What is it?

Google's Gemini 2.5 Pro, developed by Google DeepMind, is being hailed by some commentators as the most advanced AI large language model currently available. Released as an experimental model on March 25, 2025, it builds on the legacy of previous Gemini models but also offers significant improvements in intelligence and functionality. The model is designed in particular to excel at complex problem-solving, making it a powerful tool for tasks such as coding, mathematics and scientific reasoning. Google describes it as "our most intelligent AI model", and at the time of writing it tops the Chatbot Arena leaderboard, which ranks models by human preference in head-to-head comparisons.

One of the most noted features of Gemini 2.5 Pro is its "thinking model" capability. This advanced reasoning functionality allows the model to analyse information, draw logical conclusions, and take context and nuance into account before responding. Thinking LLMs are seen as the next step after Chain-of-Thought models, which articulate their line of reasoning step by step. In one related approach, Thought Preference Optimisation (TPO), a model produces multiple answers internally, which are then judged to find the most and least appropriate; this can lead to improved performance and accuracy. According to Google, this reasoning capability makes Gemini 2.5 Pro adept at tackling intricate challenges and making informed decisions.
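To make the TPO idea more concrete, here is a minimal Python sketch of its selection step, loosely following the published method. A judge scores several candidate answers, and the highest- and lowest-scoring ones become a "chosen" and "rejected" pair for preference training. The `toy_judge` function and the candidate answers are hypothetical stand-ins for a real judge model and real model outputs. Google has not said that Gemini 2.5 Pro is trained this way.

```python
from typing import Callable, List, Tuple


def preference_pair(
    candidates: List[str],
    judge: Callable[[str], float],
) -> Tuple[str, str]:
    """Score each candidate answer with the judge and return (best, worst).

    In TPO-style training, a pair like this becomes one preference
    example (chosen vs rejected) used to update the model.
    """
    if len(candidates) < 2:
        raise ValueError("need at least two candidates to compare")
    ranked = sorted(candidates, key=judge)  # ascending by judge score
    return ranked[-1], ranked[0]


# Hypothetical toy judge (not a real model): rewards answers containing
# the correct result "4" and applies a mild penalty for length.
def toy_judge(answer: str) -> float:
    score = 1.0 if "4" in answer else 0.0
    return score - 0.01 * len(answer)


# Hypothetical candidate answers standing in for model outputs.
candidates = [
    "2 + 2 = 4",
    "2 + 2 = 5",
    "Adding two and two gives 4, because ...",
]
best, worst = preference_pair(candidates, toy_judge)
print("chosen:", best)
print("rejected:", worst)
```

Here the toy judge selects "2 + 2 = 4" as the chosen answer and "2 + 2 = 5" as the rejected one. In a real system, many such pairs would feed a preference-optimisation step such as Direct Preference Optimisation (DPO).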

Additionally, Gemini 2.5 Pro is a multimodal large language model (LLM), which means that it can process and analyse text, images, audio, and video without requiring separate models or modes. Its strong performance is evident in benchmarks requiring advanced reasoning, such as AIME 2025 and GPQA. It scores 18.8% on Humanity's Last Exam (HLE) without external tool use, a strong result that places it just behind OpenAI's o3 model (20.1%). As the authors of HLE point out, LLMs currently tend to score low on the exam, but they expect models could reach 50% by the end of 2025. Such a score would demonstrate expert-level performance on closed-ended questions, although not autonomous research capability or artificial general intelligence (AGI).

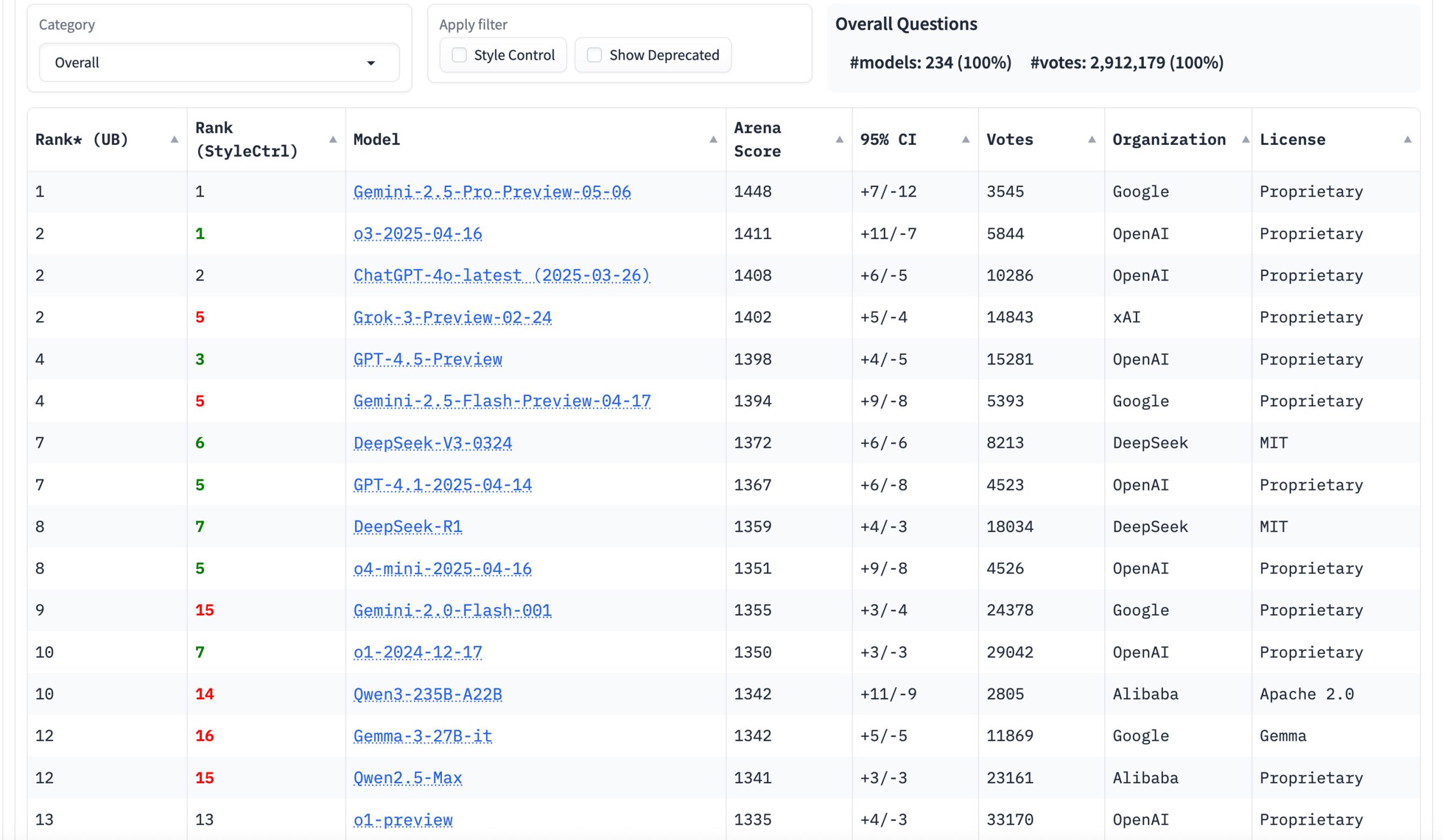

The Chatbot Arena benchmark leaderboard as of May 2025. (Source: Chatbot Arena.)

What can it do?

Compared to its predecessors, the latest version of Gemini is better at creating visually appealing web applications and agentic coding applications, as well as at code transformation (modifications to source code to improve performance and/or efficiency) and code editing. It achieved a score of 63.8% on SWE-Bench Verified with a custom agent setup and currently leads the WebDev Arena leaderboard, which measures human preference for models that build functional and aesthetically pleasing web apps. Demis Hassabis, CEO of Google DeepMind, has described it as the best coding model Google has ever built.

Gemini 2.5 Pro also features a long context window. It ships with a capacity of 1 million tokens, and Google plans to expand this to 2 million tokens soon. This extensive context window lets the model work across large datasets and draw on many information sources at once, including entire code repositories. To put it in perspective, 1 million tokens is roughly equivalent to 750,000 words, or about 10 novels. That is far more than competitors such as Anthropic's Claude 3.7 Sonnet and OpenAI's GPT-4.5, whose context windows are at or below roughly 200,000 tokens.
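The words-and-novels comparison is simple arithmetic. The sketch below reproduces it under two assumptions that Google does not state. The first is a rule-of-thumb rate of about 0.75 English words per token; actual rates vary by tokenizer and text. The second is a hypothetical average novel length of 75,000 words.

```python
# Assumed rule of thumb: ~0.75 English words per token (varies by tokenizer/text).
WORDS_PER_TOKEN = 0.75
# Hypothetical average novel length in words, chosen for illustration.
WORDS_PER_NOVEL = 75_000


def tokens_to_words(tokens: int) -> int:
    """Approximate word count for a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def tokens_to_novels(tokens: int) -> float:
    """Approximate number of average-length novels that fit in `tokens`."""
    return tokens_to_words(tokens) / WORDS_PER_NOVEL


for name, window in [
    ("Gemini 2.5 Pro (current)", 1_000_000),
    ("Gemini 2.5 Pro (planned)", 2_000_000),
    ("~200K-token competitor", 200_000),
]:
    print(
        f"{name}: {window:,} tokens ~ {tokens_to_words(window):,} words "
        f"~ {tokens_to_novels(window):.1f} novels"
    )
```

Under these assumptions, the planned 2-million-token window would correspond to roughly 1.5 million words, or about 20 novels.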

Initially, Gemini 2.5 Pro Experimental was exclusive to Gemini Advanced subscribers through the Gemini app, Google AI Studio, and Vertex AI. However, in a surprise move on March 30, Google made the model available to all Gemini users for free, albeit with rate limits and a shorter context window. It received an enthusiastic response, particularly to its coding capabilities. Google then released an updated version, Gemini 2.5 Pro Preview (I/O edition), early, on May 6, ahead of the Google I/O conference. The updated model is accessible via the Gemini API, Google AI Studio, Vertex AI, and the Gemini app on web and mobile devices, and its status in the Gemini app changed from "experimental" to "preview". Google Workspace users with access to the Gemini app can also use Deep Research with Gemini 2.5 Pro Experimental.

Why does it matter?

Gemini 2.5 Pro, particularly the updated Preview (I/O edition), has significantly boosted Google's standing in the competitive AI landscape. The model now tops major LLM leaderboards, including LMArena (formerly Chatbot Arena) and WebDev Arena. It also outperforms rivals such as OpenAI o3, Anthropic's Claude 3.7 Sonnet, and DeepSeek R1 on many reasoning and knowledge benchmarks, excelling in maths and science tests and scoring higher on Humanity's Last Exam than several key competitors. Developers have praised its capabilities, and some have switched from ChatGPT, citing its improved coding performance.

The release of this LLM is therefore seen as a pivotal moment. Google is now described as "playing to win" and "setting the pace" in the AI race, having often been seen as lagging behind since its groundbreaking early work with the DeepMind team in 2017 and 2018. The early release of the I/O edition was partly driven by intensifying competition from rivals such as OpenAI, Anthropic, Meta, and xAI. Beyond benchmarks, developers report positive real-world experiences, preferring Gemini 2.5 Pro for its fast responses, deep reasoning, and lower hallucination rates compared with models such as GPT-4 or Claude. These advances have propelled Google to the forefront of the AI race, shifting momentum decisively in its favour.

This article was co-created with AI.