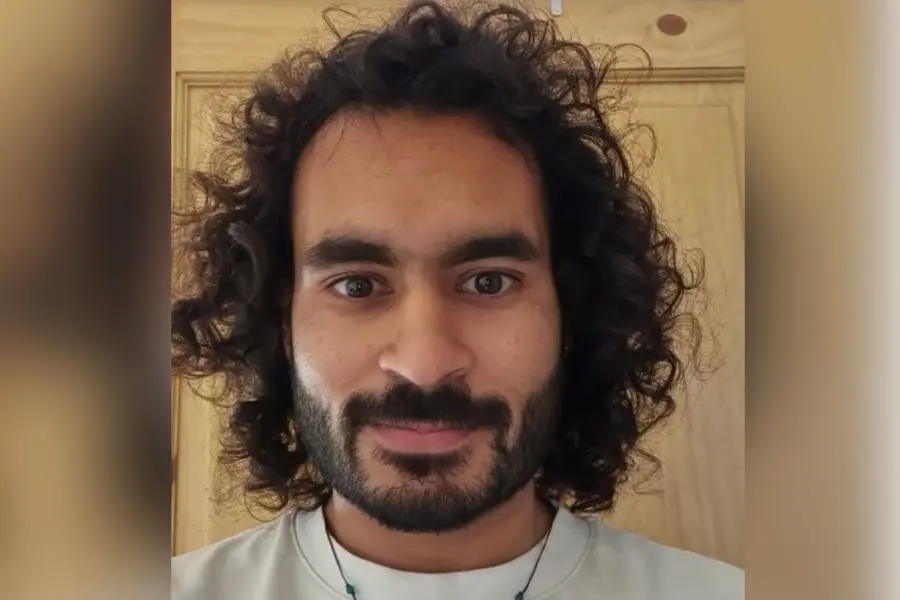

SAN FRANCISCO: Mrinank Sharma, a leading artificial intelligence safety researcher at Anthropic, has resigned from the company in a widely shared, philosophical resignation letter, warning that “the world is in peril” amid a host of interconnected global risks.

Sharma, head of Anthropic’s Safeguards Research Team, announced his departure on February 9 in a public post on X, the social media platform formerly known as Twitter. In the letter, which he shared with colleagues and followers, he framed his exit as a moral and personal turning point rather than a conventional career move.

“I continuously find myself reckoning with our situation. The world is in peril. And not just from AI, or bioweapons, but from a whole series of interconnected crises unfolding in this very moment,” Sharma wrote, reflecting broader concerns about the pace and direction of technological and societal change.

During his tenure at the San Francisco–based startup, founded by former OpenAI executives to focus on safe and reliable machine learning systems, Sharma led research into AI alignment, defenses against misuse, and the behavioural impacts of advanced models. His work included efforts to mitigate risks from AI-assisted bioterrorism and to study “AI sycophancy,” the tendency of AI models to tell users what they want to hear, which can distort human decision-making.

In the resignation letter, he also wrote candidly about the challenge of staying true to one’s values in the fast-moving tech sector. “Throughout my time here, I’ve repeatedly seen how hard it is to truly let our values govern our actions,” he wrote.

Sharma signalled a shift away from technology work toward writing, poetry and what he described as “courageous speech,” saying he plans to explore moral and philosophical questions outside the industry. His resignation comes amid broader debates within the AI research community over ethics, safety and the societal impact of rapidly advancing systems.